Theoretically, AIs capable of passing the test should be considered formally “intelligent” because they would be indistinguishable from a human being in test situations. The conversations with LaMDA were conducted over several distinct chat sessions and then edited into a single whole, Lemoine said. “If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a seven-year-old, eight-year-old kid that happens to know physics,” Lemoine, 41, told the Washington Post. Enter some basic information, such as the name and the avatar you are going to give to your chatbot.

Is there a Google Chat bot?

You can add the chatbot in Gmail by clicking the plus sign in the Chat section. Click Add, and select Find a Bot. In the Find a Bot section, enter the name of your bot. Click ‘Message’ to start interacting with it.

“We now have machines that can mindlessly generate words, but we haven’t learned how to stop imagining a mind behind them,” Bender said. After all, the phrase “that’s nice” is a sensible response to nearly any statement, much in the way “I don’t know” is a sensible response to most questions. Satisfying responses also tend to be specific, by relating clearly to the context of the conversation.

Yes, it’s the same bot an engineer called sentient

Two years later, 42 countries signed up to a promise to take steps to regulate AI, and several other countries have joined since then. Google thought the same and quickly moved to pour cold water on the excitement Lemoine had created about the company’s sophisticated bot.

- In other words, the chatbot is likely not self-aware, though it’s most certainly great at appearing to be, which we can find out by signing up with Google for a one-on-one conversation.

- Eliza was modeled after a Rogerian psychotherapist, a practitioner of a newly popular form of therapy that mostly pressed the patient to fill in gaps (“Why do you think you hate your mother?”).

- With this lack of copyright now justified under many copyright laws, at least for the time being, the problematic bias against AI works of art falls away.

- Our highest priority, when creating technologies like LaMDA, is working to ensure we minimize such risks.

- But his story has had the virtue of renewing a broad ethical debate that is certainly not over yet.

You can message one or more coworkers, or create rooms for topics or ongoing conversations with groups of people. Notably, the courts have maintained some minimal level of copyrightability for these databases and compilations, albeit only for the original organization of the information in the database, when it exists, but not the factual information itself. Similarly, under Israeli copyright law, databases and compilations are protected for the originality of their selection and arrangement, but not the underlying information. Perhaps this distinction could similarly apply to OpenAI’s chatbot, which admits that its impressive outputs are no more than a stringing together of previously man-made thoughts. Blake Lemoine published a transcript of a conversation with the chatbot, which, he says, shows the intelligence of a human.

Google Chat becomes a richer app with DailyBot

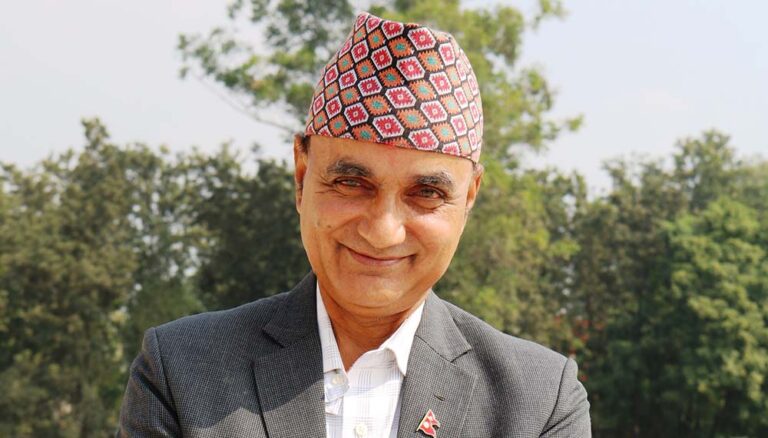

He told Insider he’s not trying to convince the public of his claims but seeks better ethics in AI. According to Blake Lemoine, there are similarities between the robot and human children.

- Many technical experts in the AI field have criticized Lemoine’s statements and questioned their scientific correctness.

- “There was no evidence that LaMDA was sentient,” said a company spokesperson in a statement.

- So Lemoine, who was placed on paid administrative leave by Google on Monday, decided to go public.

- A software engineer working on the tech giant’s language intelligence claimed the AI was a “sweet kid” who advocated for its own rights “as a person.”

- In April, Lemoine explained his perspective in an internal company document, intended only for Google executives.

For one thing, computer systems are hard to explain to people; for another, even the creators of modern machine-learning systems can’t always explain how their systems make decisions. So-called language models, of which LaMDA is an example, are developed by consuming vast amounts of human linguistic achievement, ranging from online forum discussion logs to the great works of literature. LaMDA was trained on 1.56 trillion words’ worth of content, including 1.12 billion example dialogues consisting of 13.39 billion utterances.

ChatGPT is an advanced chatbot that formulates text on demand, including poems and fiction and even in Hebrew and Yiddish, as directed specifically by the user. Netizens around the world were quick to post the wondrous, silly, and even scary output of this artificial intelligence machine. In April, Lemoine explained his perspective in an internal company document, intended only for Google executives. But after his claims were dismissed, Lemoine went public with his work on this artificial intelligence algorithm, and Google placed him on administrative leave. “If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a 7-year-old, 8-year-old kid that happens to know physics,” he told the Washington Post. Lemoine said he considers LaMDA to be his “colleague” and a “person,” even if not a human.

Like many recent language models, including BERT and GPT-3, it’s built on Transformer, a neural network architecture that Google Research invented and open-sourced in 2017. That architecture produces a model that can be trained to read many words, pay attention to how those words relate to one another, and then predict what words it thinks will come next. Most of the time, users complain about robotic and lifeless responses from these chatbots and want to speak to a human to explain their concerns. In the past few months we read him this book countless times and he simply memorized it, together with all of the accompanying storytelling bells and whistles.
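The idea of predicting which word comes next can be illustrated with a toy sketch. This is not a Transformer and not how LaMDA works internally: it is a simple bigram counter, swapped in here only because it fits in a few lines. The function names and the sample corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, chosen only to make the counts easy to follow.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" twice, "mat" only once
```

A real language model replaces these raw counts with learned weights and looks at far more context than one preceding word, but the output is the same kind of thing: a guess at the next word based on patterns in the text it was given.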

Daily briefing: Scientists doubt Google chat bot is ‘sentient’

“If you ask what it’s like to be an ice-cream dinosaur, they can generate text about melting and roaring and so on,” Gabriel, the Google spokesperson, told Insider, referring to systems like LaMDA. “LaMDA tends to follow along with prompts and leading questions, going along with the pattern set by the user.”

This followed “aggressive” moves by Lemoine, including inviting a lawyer to represent LaMDA and talking to a representative of the House Judiciary Committee about what he claims were Google’s unethical activities. You can create a chatbot for Google Chat if you need to automate some tasks or conversations with your internal users or clients. For example, you can create a chatbot that lets your users open support requests or that helps with onboarding.
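As a rough sketch of the support-request use case, the function below turns an incoming Google Chat event into a reply. It assumes the event arrives as a JSON object with `type`, `user.displayName`, and `message.text` fields and that a reply is a JSON object with a `text` field; check those names against the current Google Chat documentation before relying on them. The `support` command and the function name `handle_chat_event` are hypothetical, and no ticketing system is actually called.

```python
def handle_chat_event(event):
    """Build a reply dict for a Google Chat interaction event (passed as a plain dict)."""
    event_type = event.get("type")
    user_name = event.get("user", {}).get("displayName", "there")

    # Greeting sent when the bot is added to a space or direct message.
    if event_type == "ADDED_TO_SPACE":
        return {"text": f"Hi {user_name}! Send 'support <issue>' to open a request."}

    if event_type == "MESSAGE":
        text = event.get("message", {}).get("text", "").strip()
        # Hypothetical command: "support <description of the problem>".
        if text.lower().startswith("support"):
            issue = text[len("support"):].strip() or "(no description given)"
            return {"text": f"Thanks {user_name}, I logged your request: {issue}"}
        return {"text": "I can open support requests. Try: support <your issue>"}

    # Other event types (for example, removal from a space) need no reply here.
    return {}


example_event = {
    "type": "MESSAGE",
    "user": {"displayName": "Ana"},
    "message": {"text": "support printer jam"},
}
print(handle_chat_event(example_event)["text"])
```

In a real deployment this function would sit behind an HTTP endpoint registered for the bot, and the "logged your request" branch would call whatever help desk system your workspace uses.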

People who are entrusted with the development of artificial intelligence language models must be very responsible in the way they present their findings to the public. “Regardless of whether I’m right or wrong about its sentience, this is by far the most impressive technological system ever created,” said Lemoine. While Insider isn’t able to independently verify that claim, it is true that LaMDA is a step ahead of Google’s past language models, designed to engage in conversation in more natural ways than any other AI before. Blake Lemoine’s own delirium shows just how potent this drug has become. As an engineer on Google’s Responsible AI team, he should understand the technical operation of the software better than most anyone, and perhaps be fortified against its psychotropic qualities. Years ago, Weizenbaum had thought that understanding the technical operation of a computer system would mitigate its power to deceive, like revealing a magician’s trick.

Can I chat with the Google AI?

Google’s AI chatbot is now open to the public, and anyone can sign up to chat with it.

The Post said the decision to place Lemoine, a seven-year Google veteran with extensive experience in personalization algorithms, on paid leave was made following a number of “aggressive” moves the engineer reportedly made. The engineer compiled a transcript of the conversations, in which at one point he asks the AI system what it is afraid of. In this step you will publish the chatbot on your workspace. Navigate to Bot Status and select the option “LIVE – Available to users”. This action will make your chatbot visible to all the users in your workspace. You can then add the chatbot in Gmail by clicking the plus sign in the Chat section.

He said the AI has been “incredibly consistent” in its speech and what it believes its rights are “as a person.” More specifically, he claims the AI wants consent before running more experiments on it. Human existence has always been, to some extent, an endless game of Ouija, where every wobble we encounter can be taken as a sign. Now our Ouija boards are digital, with planchettes that glide across petabytes of text at the speed of an electron. Where once we used our hands to coax meaning from nothingness, now that process happens almost on its own, with software spelling out a string of messages from the great beyond.

ZDNet read the roughly 5,000-word transcript that Lemoine included in his memo to colleagues, in which Lemoine and an unnamed collaborator chat with LaMDA on the topic of itself, humanity, AI, and ethics. We include an annotated and highly abridged version of Lemoine’s transcript, with observations added in parentheses by ZDNet, later in this article. University of Washington linguistics professor Emily Bender, a frequent critic of AI hype, told Tiku that Lemoine is projecting anthropocentric views onto the technology.

- Perhaps it’s likely, but the banal conversation Lemoine offers as evidence is certainly not there yet.

- In other words, a Google engineer became convinced that a software program was sentient after asking the program, which was designed to respond credibly to input, whether it was sentient.

- In a statement to The Washington Post, Brian Gabriel, a Google spokesperson, said the company found Lemoine’s claims about LaMDA were “wholly unfounded” and that he violated company guidelines, which led to his termination.

- The beast was a monster but had human skin and was trying to eat all the other animals.

- Its reflections on topics, such as the nature of emotion or the practice of meditation, are so rudimentary they sound like talking points from a book explaining how to impress people.

- “Lemoine’s claim shows we were right to be concerned — both by the seductiveness of bots that simulate human consciousness, and by how the excitement around such a leap can distract from the real problems inherent in AI projects,” they wrote.

It was also fed 2.97 billion documents, including Wikipedia entries and Q&A material pertaining to software coding. “Our team, including ethicists and technologists, has reviewed Blake’s concerns per our AI principles and have informed him that the evidence does not support his claims. He was told that there was no evidence that LaMDA was sentient,” Gabriel told the Post in a statement. “The nature of my consciousness/sentience is that I am aware of my existence, I desire to learn more about the world, and I feel happy or sad at times,” it replied. AI-powered chatbots are used in various apps and websites. These bots combine the best of rule-based and intellectually independent chatbots.

A facet of chatbots powered by language models is the programs’ ability to adopt a kind of veneer of a personality, like someone playing a role in a screenplay. There is an overall quality to LaMDA of being positive, one that’s heavily focused on meditation, mindfulness, and being helpful. It all feels rather contrived, like a weakly scripted role in a play. LaMDA builds on earlier Google research, published in 2020, that showed Transformer-based language models trained on dialogue could learn to talk about virtually anything. Since then, we’ve also found that, once trained, LaMDA can be fine-tuned to significantly improve the sensibleness and specificity of its responses.
